Thoughts and Reflections on NIPS 2017

This year, the Conference on Neural Information Processing Systems (NIPS) saw its largest attendance to date, with over 8300 attendees and over 3200 papers submitted. It was held in Long Beach, California. It was also my first NIPS, so I wanted to document my thoughts and impressions of the conference, events, and notable research.

Disclaimer: The opinions and writings below are completely my own, and do not reflect the positions of any institutions I am affiliated with.

Conference

The conference itself was like drinking from a fire hose. In the span of a week, most of the greatest minds in machine learning, cognitive psychology, and neuroscience were all in one place, having rich discussions and presenting world-class research. It was incredibly inspiring, yet humbling at the same time.

Because of the sheer number of submissions and attendees, most of the events were run massively in parallel, which forced prioritization and compromise for every talk throughout the day. Unfortunate, yet unavoidable. Workshops, however, provided a great venue to engage with presenters and dive deep into a few specific posters without feeling the pressure of burdening everyone else with my questions.

There is an incredible amount of industry interest in machine learning and AI. Virtually every company threw one or more lavish (by academic standards) events throughout the week (Intel even brought Flo Rida to perform!). Some companies gave interviews and offers on the spot. Bloomberg compared the conference to a professional sports draft. It certainly is a hot time to be doing machine learning.

We hosted a casual meet-up for Pyro contributors and developers. It was attended by the core Pyro team, PyTorch developers, academic collaborators, and independent researchers. Thanks for coming out!

Talks

Here are some highlights from the talks given during the conference, including tutorials, symposia, keynotes, and workshops.

Reverse Engineering Intelligence with Probabilistic Programs

Josh Tenenbaum talked about reverse engineering the intuitions and “intelligence” found in toddlers. Toddlers are able to perform basic reasoning and inference from a very early age. The goal is to use probabilistic programs to model the cognitive processes behind intuitive physics and psychology.

The approach is to treat intuitive physics as a pattern recognition problem. In other words, you only need a small number of approximate simulations to predict what will happen next. He showed interesting demonstrations of the models predicting events in the near future (e.g. a stack of blocks falling over).
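
To make the idea of "a small number of approximate simulations" concrete, here is a toy sketch of my own, not code from the talk. It assumes a 1-D stack of equal-mass blocks, a simplified stability rule, and Gaussian perceptual noise on the observed block positions; the names `stack_is_stable` and `probability_of_falling` are hypothetical.

```python
import random


def stack_is_stable(offsets, width=1.0):
    """Simplified stability check for a 1-D stack of equal-mass blocks.

    offsets[i] is the horizontal centre of block i (index 0 is the bottom).
    The stack counts as stable when, for every block, the centre of mass of
    all blocks above it lies over that block's top face.
    """
    for i in range(len(offsets) - 1):
        above = offsets[i + 1:]
        centre_of_mass = sum(above) / len(above)
        if abs(centre_of_mass - offsets[i]) >= width / 2:
            return False
    return True


def probability_of_falling(observed_offsets, noise_std=0.1,
                           num_simulations=20, seed=0):
    """Estimate how likely the stack is to fall from a few noisy simulations."""
    rng = random.Random(seed)
    falls = 0
    for _ in range(num_simulations):
        # Perturb the observed positions to mimic uncertain perception.
        noisy = [x + rng.gauss(0.0, noise_std) for x in observed_offsets]
        if not stack_is_stable(noisy):
            falls += 1
    return falls / num_simulations


if __name__ == "__main__":
    print(probability_of_falling([0.0, 0.3, 0.55]))
```

The point of the sketch is only that a handful of cheap, noisy rollouts already yields a graded judgement ("probably falls") rather than a hard yes or no.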

Josh also talked about applications of probabilistic programs in inverse graphics. Inverse graphics is the problem of taking a 2D image and derendering it into its components (usually in 3D). Probabilistic modeling/programming can also be applied to inferring the objectives of agents (“intuitive psychology”).

Learning from First Principles

Demis Hassabis gave a talk on AlphaZero and learning from first principles. He started with his usual talk of general intelligence and the accomplishments of AlphaGo. He then talked about AlphaZero, which was able to beat SOTA systems in Go, Chess, and Shogi after a day of training. The program was initialized with the same hyperparameters across all three games and zero knowledge of the game other than the rules.

Demis did acknowledge that these RL systems are very data inefficient (for comparison, AlphaZero played tens of millions of games, versus the tens of thousands a human professional plays in a lifetime). He noted a key difference, however: humans learn from other humans and from textbooks, which in and of themselves contain thousands of years of accumulated knowledge from playing the game. He joked that a fair comparison would be to train one agent from scratch and another encoded with the knowledge in Go textbooks, and then measure exactly how many games of self-play a book is worth.

Cognitively Informed AI

This was a really interesting workshop, and I’m disappointed I missed the talks by Tom Griffiths and Yoshua Bengio on meta-cognitive processes with rational models and learning higher level cognition from disentangled representations, respectively. I did catch Matt Botvinick’s talk on meta-RL. Essentially, he proposed using one RL system (e.g. the dopamine system) to train another system (e.g. the prefrontal cortex), which will be more efficient and better suited for the task at hand.

Hierarchical Reinforcement Learning

Pieter Abbeel gave a talk on hierarchical RL. He was enthusiastic about a hierarchical RL workshop since he himself has historically “not had a lot of success in this area”. Hierarchical RL is useful for tackling problems like exploration, credit assignment, and transfer learning. He cited Peter Dayan and Geoff Hinton’s 1993 Feudal RL paper as one of the most promising approaches, though there has not been much follow-up since then. Essentially, it proposes a hierarchy of managers in which reward is passed downward to sub-managers. Pieter ventured that the eventual solution will consist of tens of layers.

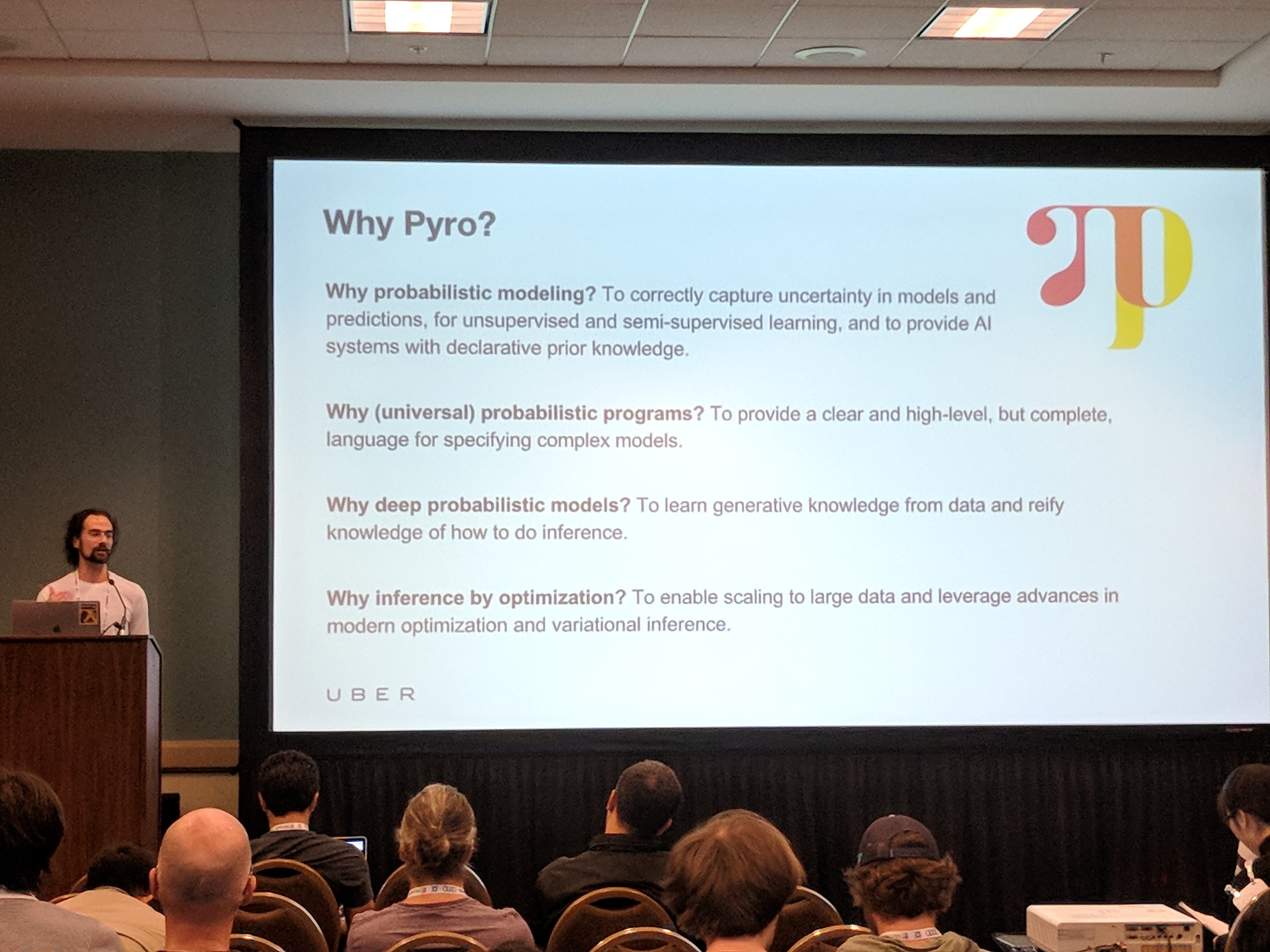

Pyro

Noah Goodman (along with Martin) gave a talk at the “Learn how to code a paper with state of the art frameworks” workshop about our universal probabilistic programming language, Pyro. You can find the Jupyter notebook corresponding to the talk here.

Papers

Below is a small subset of papers I found interesting. I want to preface this by saying that the list is heavily subject to selection bias, and I am fully aware that I may be omitting outstanding papers that deserve mention. I am also aware that this is machine learning after all, so these papers have been out for a while and may seem rather outdated to some readers. Nonetheless, they were published at NIPS, so here they are:

Capsule Networks – Sabour et al [1] released a paper on Capsule Networks. This descends from an older idea by Hinton in which a group of neurons acts as a “capsule” whose activity vector represents some property of interest. In a layered architecture, lower level capsules with strong signals can activate higher level capsules (“routing by agreement”). They demonstrated SOTA results on MNIST and noted that multi-layer capsule networks perform better than standard CNNs on Multi-MNIST.
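
For readers who want to see what routing by agreement looks like mechanically, below is a minimal NumPy sketch based on my reading of the routing procedure in [1]. It is not the authors' code: the function names, array shapes, and the choice of three iterations are my own assumptions, and `u_hat` stands in for the predictions that lower level capsules make for each higher level capsule.

```python
import numpy as np


def squash(s, axis=-1, eps=1e-9):
    """Shrink vectors so their length lies in [0, 1) while keeping direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def softmax(x, axis):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def route_by_agreement(u_hat, num_iterations=3):
    """Route lower capsule predictions to higher capsules.

    u_hat has shape (num_lower, num_upper, dim): the vector each lower
    capsule predicts for each higher capsule.
    Returns the higher capsule outputs v and the coupling coefficients c.
    """
    num_lower, num_upper, _ = u_hat.shape
    b = np.zeros((num_lower, num_upper))  # routing logits
    for _ in range(num_iterations):
        # Each lower capsule spreads its vote across the higher capsules.
        c = softmax(b, axis=1)
        # Weighted sum of predictions, then squash to get capsule outputs.
        s = np.sum(c[:, :, None] * u_hat, axis=0)
        v = squash(s)
        # Predictions that agree with the output get a larger logit.
        b = b + np.sum(u_hat * v[None, :, :], axis=-1)
    return v, c


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    v, c = route_by_agreement(rng.normal(size=(6, 3, 4)))
    print(v.shape, c.shape)
```

When the lower capsules agree on a prediction for one higher capsule and disagree on another, the coupling coefficients shift toward the capsule they agree on.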

REBAR – In the world of probabilistic machine learning, variance reduction has always been the name of the game. One of the main drawbacks of probabilistic methods in high dimensions is high variance, and recent variance reduction techniques have made big strides in this area. Tucker et al [2] introduced REBAR, which combines the REINFORCE estimator with the Concrete relaxation of discrete variables to produce low-variance, unbiased gradient estimates. It crucially leverages the reparameterization trick, which allows low-variance estimates for models with continuous variables. There has since been further research building on this, notably RELAX [3], a more general form of the REBAR estimator, which is under review for ICLR.
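
As a rough illustration of why the reparameterization trick matters (this is not REBAR itself, just the two ingredients it builds on), the sketch below estimates the gradient of E[z^2] with respect to mu for z ~ N(mu, 1) in two ways: with the score-function (REINFORCE) estimator and with the reparameterized estimator. The toy objective and the function name `gradient_estimates` are my own choices.

```python
import numpy as np


def gradient_estimates(mu, num_samples=10_000, seed=0):
    """Per-sample estimates of d/dmu E[z^2] for z ~ N(mu, 1).

    Returns two arrays of per-sample gradient estimates:
    score-function (REINFORCE) and reparameterized.
    The exact gradient is 2 * mu.
    """
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal(num_samples)
    z = mu + eps  # reparameterization: z is a deterministic function of mu and noise
    # REINFORCE: f(z) * d/dmu log N(z; mu, 1) = z^2 * (z - mu)
    score_function = (z ** 2) * (z - mu)
    # Reparameterized: differentiate f(mu + eps) directly, giving 2 * z
    reparameterized = 2.0 * z
    return score_function, reparameterized


if __name__ == "__main__":
    sf, rp = gradient_estimates(mu=1.0)
    print("REINFORCE mean/var:       ", sf.mean(), sf.var())
    print("reparameterized mean/var: ", rp.mean(), rp.var())
```

Both estimators are unbiased, but the score-function estimator has noticeably higher variance on this toy problem. The reparameterized form is not directly available for discrete variables, which is the gap that continuous relaxations such as Concrete aim to bridge.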

TrustVI – Regier et al [4] introduced TrustVI, a black box variational inference method that uses trust region optimization and converges at least an order of magnitude faster than ADVI [5]. The algorithm roughly works as follows:

1. construct a model of the objective in the trust region

2. generate and evaluate a proposal step

3. update the trust region radius based on (2)

4. rinse and repeat
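
The loop above is the same shape as a classical trust region method. The sketch below is the textbook deterministic version with a quadratic model and a Cauchy point step, not TrustVI's stochastic variant; the function name, thresholds (0.25, 0.75), and default parameters are conventional choices I am assuming for illustration.

```python
import numpy as np


def trust_region_minimize(f, grad, hess, x0, radius=1.0, max_radius=10.0,
                          eta=0.1, tol=1e-6, max_iters=200):
    """Minimize f with a basic trust region loop using the Cauchy point."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iters):
        g, B = grad(x), hess(x)
        g_norm = np.linalg.norm(g)
        if g_norm < tol:
            break
        # Model of the objective inside the region ||p|| <= radius:
        #   m(p) = f(x) + g.p + 0.5 * p.B.p
        # Proposal: the Cauchy point, i.e. the model minimizer along -g.
        gBg = g @ B @ g
        tau = 1.0 if gBg <= 0 else min(1.0, g_norm ** 3 / (radius * gBg))
        p = -(tau * radius / g_norm) * g
        # Evaluate the proposal: actual decrease versus predicted decrease.
        predicted = -(g @ p + 0.5 * p @ B @ p)
        actual = f(x) - f(x + p)
        rho = actual / predicted
        # Update the radius based on how well the model predicted the step.
        if rho < 0.25:
            radius *= 0.25
        elif rho > 0.75 and np.isclose(np.linalg.norm(p), radius):
            radius = min(2.0 * radius, max_radius)
        # Accept the step only if it made enough real progress.
        if rho > eta:
            x = x + p
    return x


if __name__ == "__main__":
    A = np.array([[3.0, 1.0], [1.0, 2.0]])
    b = np.array([1.0, 1.0])
    x_min = trust_region_minimize(
        f=lambda x: 0.5 * x @ A @ x - b @ x,
        grad=lambda x: A @ x - b,
        hess=lambda x: A,
        x0=[0.0, 0.0],
    )
    print(x_min)
```

The ratio `rho` is what ties the steps together: a model that predicts the real decrease well earns a larger region, and a poor prediction shrinks it.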

Inverse Graphics – Wu, Wang, et al [6] introduced MarrNet, a neural net that is able to reconstruct 3D objects from a 2D image via 2.5D sketches. This was one among a plethora of papers from this group on inverse graphics and scene derendering. MarrNet consists of 3 parts: a 2.5D sketch estimator which takes a 2D image and predicts properties of the 2.5D sketch, a 3D shape estimator which infers the 3D shape, and a reprojection consistency function that enforces consistency between the sketch and the inferred object. They used 2.5D sketches since they are easy and cheap to construct and avoid the pitfalls of realistic images.

Neural Program Meta-Induction – Neural program induction methods are generally quite data hungry. Devlin et al [7] proposed techniques for cross-task knowledge transfer which reduce the amount of data needed (ergo “meta-induction”). They train a single network which represents an entire (exponential) family of tasks and optimize over the latent variables conditioned on the I/O examples. At test time, they use a k-shot learning scenario in which the model is given k examples from a completely new task it has not yet seen.

AlphaZero – David Silver gave a talk on AlphaZero [8], the “successor” to AlphaGo Zero. The paper is well worth a read if you are familiar with DeepMind’s previous achievements with AlphaGo (and especially if you are a chess enthusiast). The AlphaGo team was able to use the same RL algorithm with the same initialization and surpass previous SOTA in three different games.

Recurrent Neural Modules – Hafner et al [9] introduced a recurrent neural architecture “ThalNet” with a routing mechanism inspired by the thalamus in the human brain. The recurrent modules route their feature vectors to a routing center where they are combined, then read selectively by each module in the next time step. They demonstrate better performance and generalization than stacked GRUs.

Demos

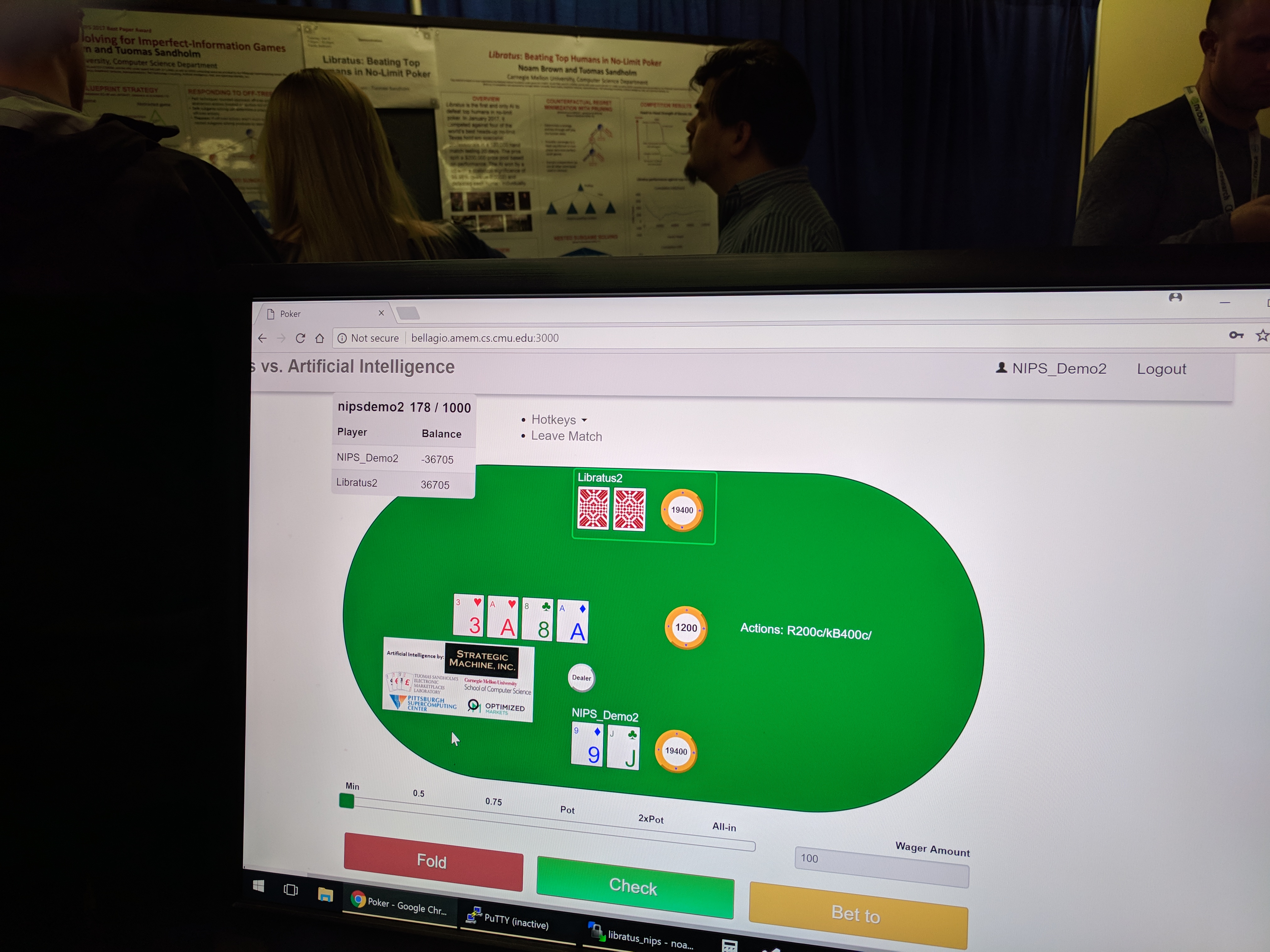

Researchers at CMU demonstrated Libratus, the heads-up no-limit Texas hold ’em bot that convincingly beat top professionals. I played a few hands against it!

(Image taken from https://sites.google.com/view/one-shot-imitation)

Pieter Abbeel’s group demoed meta-imitation learning with a robot arm that was able to learn from demonstration and generalize to other objects.

Events

Uber @ NIPS

Uber hosted a party (jointly between AI Labs and ATG) on the second night. It was completely full, and I had great conversations with some really interesting folks!

Uber Party venue

View from the party

Pyro Margarita :)

Tesla Fireside Chat

Tesla held a fireside chat with CEO Elon Musk, Head of AI Andrej Karpathy, and Head of Hardware Jim Keller. Some takeaways:

- Tesla is developing its own hardware for AI computation, which will presumably run in its cars

- Elon does not think fusion on earth is needed. The sun provides more than enough energy, and we are currently using “a pitiful amount”

- Elon thinks AGI will happen in the next 5-7 years and will be a greater threat to jobs than autonomous cars

- Elon believes fully autonomous vehicles will be deployed in 1-2 years.

- Jim does not believe that we will hit hard limitations on hardware. “I’ve been hearing those claims every 10 years for 30 years. I’ve decided to stop listening altogether”

- Andrej thinks progress towards AGI is a step function and will happen suddenly/unexpectedly. If you look at the progress of AlphaGo Zero, there was a long period of (quiet) engineering effort and algorithmic advances, and then it reached superhuman level in 3 days.

References

[1] Sabour et al. Dynamic Routing between Capsules.

[2] Tucker et al. REBAR: Low-variance, unbiased gradient estimates for discrete latent variable models.

[3] Grathwohl et al. Backpropagation through the Void: Optimizing control variates for black-box gradient estimation.

[4] Regier et al. Fast Black-box Variational Inference through Stochastic Trust-Region Optimization.

[5] Kucukelbir et al. Automatic Differentiation Variational Inference.

[6] Wu, Wang, et al. MarrNet: 3D Shape Reconstruction via 2.5D Sketches.

[7] Devlin, Bunel, et al. Neural Program Meta-Induction.

[8] Silver et al. Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.

[9] Hafner et al. Learning Hierarchical Information Flow with Recurrent Neural Modules.

Notice anything incorrect? Please let me know!